Clinical Evaluation online: Implementation of a clinical evaluation tool into a web-based format for baccalaureate nursing students

by Jacqueline Ellis1, RN, PhD

Stephanie Langford1, RN, MEd

Joseph Mathews2, BEng (C)

Frances Fothergill Bourbonnais1, RN, PhD

Diane Alain1, RN, MSN

Betty Cragg1, RN, EdD

1 University of Ottawa, School of Nursing, 451 Smyth Rd, Ottawa, Ontario, Canada K1H8M5

2 University of Ottawa, School of Information Technology and Engineering, 800 King Edward Avenue, Ottawa, Ontario, Canada, K1N 6N5

Abstract

A web-based approach to practicum evaluation was phased into the curriculum for students in the University of Ottawa BScN program in Ontario, Canada. The evaluation tool used by students and clinical professors since 2003 forms the structure for the Clinical Evaluation Online (CEO) interface. Moving to a web-based format allowed faculty to eliminate paper copies of evaluation reports and to store data in a format from which reports describing trends in student performance can be generated.

The pilot group involved 24 students and 4 clinical professors. After using CEO for a semester, students completed the System Usability Scale (SUS), and both students and professors provided feedback about the system. CEO scored an average of 68/100 on the SUS, which is associated with the descriptor ‘good’ on the adjective scale. Overall, CEO was found to be user friendly but in need of improvements. The program faculty are currently updating the system to meet these needs.

Keywords: clinical evaluation, electronic documentation, usability testing

Introduction

A clinical evaluation tool was developed and leveled for all years of a BScN program offered on three sites in two languages at the University of Ottawa in Ontario, Canada. The impetus for the development of this tool was to ensure consistency in the process of clinical evaluation for both clinical professors and students. In addition, a tool that was leveled allowed for demonstration of progression in depth and scope of practice as students move from year to year. This practicum evaluation tool has been used by all students and clinical professors since 2003 (Fothergill Bourbonnais, Langford, & Giannantonio, 2008). Students and clinical professors have had access to the tool as an MS Word document in order to type their respective evaluations but the final product submitted was in paper format.

The introduction of a web-based approach to clinical evaluation was phased into the curriculum with students enrolled in the first practicum course in a newly revised curriculum during the academic year 2008-2009. The system was piloted and evaluated with these students and their clinical professors with the expectation that this group of students would use only the web-based mode for clinical evaluation. Thus they would adjust to the new technology with a clinical evaluation form that was well known to the clinical professors and had been used for many years.

The purpose of this paper is to describe the implementation and preliminary evaluation of the Clinical Evaluation Online (CEO) system.

Background

Evaluation is an integral part of clinical practice for nursing students at the undergraduate level. Evaluation reports that reflect student clinical achievement are typically program specific and are used to track student progress in most schools of nursing across North America (Oermann, Yarbrough, Saewert, Ard, & Charasika, 2009).

A clinical evaluation tool that is comprehensive, logical, easy to understand and readily accessible can provide a framework for student learning, clinical teaching, and curricular evaluation. Students reflect on their clinical practice guided by clearly defined behavioral indicators that support clinical learning outcomes. Learning outcomes such as “practices in a safe manner” and “demonstrates professional accountability” are essential to patient safety and must be maintained for students to remain in the clinical area.

In this Canadian university nursing program, each practicum course requires both formative and summative evaluation supported by data from multiple sources such as anecdotal reports, care plans, and direct observation of student practice. A final summative evaluation report from both the student and clinical professor is a required element in all practicum courses and subsequently becomes a permanent part of students’ academic records.

Since 2003, this BScN program has used a standardized and leveled clinical evaluation tool for all undergraduate practicum courses (Fothergill Bourbonnais et al., 2008). The evaluation tool is organized according to the five BScN program outcomes adopted by this university: self-directed learner (Patterson, Crooks, & Lunyk-Child, 2002); effective communicator (Arnold & Boggs, 2003); critical thinker (National Council for Excellence in Critical Thinking Instruction, 1992; Scriven & Paul, 2006); knowledge worker (Drucker, 1983); and evolving professional (College of Nurses of Ontario (CNO), 2005; 2009; 2010). From these five program outcomes, 12 clinical outcomes were determined. Each clinical outcome was further defined by clinical practice indicators that reflect RN entry to practice competencies. These indicators were based on the CNO entry to practice competencies (2005; 2009; 2010) as well as other indicators deemed important by professors in each practicum course.

At the start of each practicum course, the professor and students review the expected clinical outcomes and clinical practice indicators outlined in the evaluation tool and the context-specific outcomes for the practicum course such as those that are important for maternal-newborn care, child health or mental health. This teacher-student dialogue begins the ongoing formative component of the clinical evaluation process used at the university. Students must demonstrate satisfactory performance in each of the 12 clinical outcomes in order to pass the practicum courses. The detailed clinical practice indicators help both students and professors to recognize the behaviors expected of students leveled according to year of the program.

Motivation

Our BScN program has increased in student enrollment and scope over the past decade. Currently we offer the program in English and French at 3 campuses to approximately 1200 students. The curriculum has 8 practicum courses, each with 117 hours of lab and clinical practice activities. The decision to move to a web-based format was motivated by a number of factors, including increasing student numbers and the desire to eliminate paper copies of evaluation reports. The 6-page paper-based tool required printing, photocopying, filing, distributing, collecting and redistributing to students and clinical professors. This process was time-consuming and expensive, and it did not allow student performance to be tracked across courses to support student progression.

A paper-based evaluation format had been adequate as the basis for student-teacher discussions of clinical performance and for decisions about course success or failure; however this paper format had some limitations for providing an overview of clinical performance across all clinical courses. A database format will enable us to generate reports that illustrate trends in student performance per course and per year. This information will be helpful in evaluating course objectives, clinical outcomes and the practice indicators to determine the relevancy to student clinical practice.

Clinical Evaluation Online (CEO)

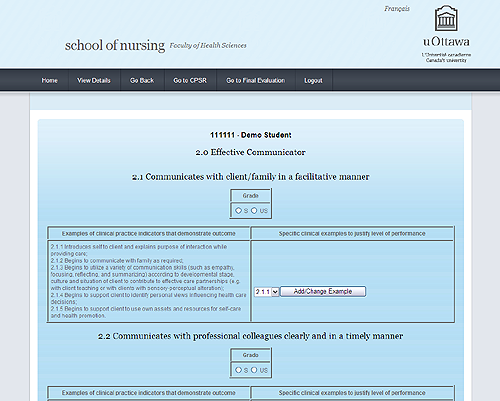

Students and clinical professors enter the CEO evaluation web portal with a unique user name and password either in French or English. The clinical evaluation tool described above forms the structure for the CEO interface. The initial screen has the five program outcomes displayed as links to the 12 clinical outcomes, with accompanying data fields. The 12 clinical outcomes have examples of clinical practice indicators, which are used as prompts by the professor and student to provide examples in the textbox to describe student performance. One clinical example of student performance may reflect several indicators. The clinical professor provides an overall rating of satisfactory or unsatisfactory for each of the 12 clinical outcomes. Figure 1 provides a view of the program outcomes and some of the clinical practice indicators.
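The structure described above can be pictured as a small data model. The following is a minimal sketch in Python, not the CEO implementation (which the authors wrote in PHP). The class name `OutcomeEntry`, its fields, and the function `passes_practicum` are hypothetical, and the 12 clinical outcomes are represented only by placeholder numbers because their wording is not reproduced here.

```python
from dataclasses import dataclass
from typing import List, Optional

NUM_CLINICAL_OUTCOMES = 12  # stated in the article; outcome wording is not given


@dataclass
class OutcomeEntry:
    """One clinical outcome on an evaluation (hypothetical shape)."""
    outcome_id: int                      # placeholder identifier, 1..12
    example_text: str = ""               # clinical example typed into the textbox
    satisfactory: Optional[bool] = None  # None until the professor rates it


def passes_practicum(entries: List[OutcomeEntry]) -> bool:
    """Return True only if every one of the 12 outcomes is rated satisfactory."""
    rated = {e.outcome_id: e.satisfactory for e in entries}
    return all(rated.get(i) is True for i in range(1, NUM_CLINICAL_OUTCOMES + 1))
```

An outcome that is unrated or missing counts as not yet satisfactory in this sketch, which mirrors the rule that students must be satisfactory in each of the 12 clinical outcomes to pass.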

The students and clinical professors create their evaluations independently, and only when both are completed can they be viewed together. Consequently, two parallel evaluations are produced simultaneously. When teacher and student have finished their respective evaluations, they select a button labeled ‘Send for Review’, which creates a dual screen in which both sets of evaluation comments are presented side-by-side (see Figure 2). This ‘dual screen’ view is accessed in the professor’s CEO program during the evaluation interview, when the student and clinical professor meet to discuss student performance. A clinical progress summary report (CPSR) is also shared during this face-to-face interview. It is the CPSR that is passed on electronically to the student’s next practicum teacher. Prior to CEO, students provided their next clinical professor with a paper copy of their CPSR.

Student performance in the 12 clinical outcomes is reviewed during the student-professor interview. Evaluation details added only by the clinical professor include hours absent due to student illness and completion of course requirements, including weekly anecdotal notes and written assignments such as a patient care plan. A learning plan must be completed if student performance is unsatisfactory in any of the 12 clinical outcomes at mid-point in the practicum. The actual text of a learning plan could be included as part of the student’s CEO profile as well.

There is a text box at the bottom of the clinical professor’s evaluation in the dual screen view for additional information that may be generated during the evaluation meeting. For example, a student may not agree with the clinical professor’s evaluation comments and would like to add a different perspective on a particular incident or practice indicator. Both the student and clinical professor have the option of adding text prior to finalizing the evaluation. Once the evaluation meeting is completed and the student and professor jointly agree on the comments, the evaluation is submitted to the CEO database. At this point the link to the student’s evaluation disappears from the professor’s desktop. However, students have access to all of their practicum course evaluations over the duration of the program.
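The review workflow described in this section can be summarized as a small state machine. The sketch below is an illustration only, written in Python under our own assumptions; the class `PracticumEvaluation` and its method names are hypothetical and do not come from the CEO code base.

```python
from enum import Enum


class Status(Enum):
    DRAFT = "draft"
    SENT_FOR_REVIEW = "sent for review"


class PracticumEvaluation:
    """Paired student and professor evaluations for one practicum (hypothetical)."""

    ROLES = ("student", "professor")

    def __init__(self):
        self.status = {role: Status.DRAFT for role in self.ROLES}
        self.comments = {role: "" for role in self.ROLES}
        self.meeting_notes = []
        self.submitted = False

    def write(self, role, text):
        # Each party edits only its own evaluation, and only while in draft.
        if self.status[role] is not Status.DRAFT:
            raise ValueError(f"{role} evaluation was already sent for review")
        self.comments[role] = text

    def send_for_review(self, role):
        self.status[role] = Status.SENT_FOR_REVIEW

    def dual_view(self):
        # The side-by-side view exists only once both parties have finished.
        if any(s is not Status.SENT_FOR_REVIEW for s in self.status.values()):
            raise PermissionError("both evaluations must be sent for review first")
        return self.comments["student"], self.comments["professor"]

    def add_meeting_note(self, role, text):
        # Either party may add text during the meeting, before finalizing.
        if self.submitted:
            raise ValueError("evaluation already submitted")
        self.dual_view()  # raises unless both evaluations are ready
        self.meeting_notes.append((role, text))

    def submit(self):
        self.dual_view()  # raises unless both evaluations are ready
        self.submitted = True
```

Keeping the two evaluations hidden from each other until both are sent preserves their independence, which is the design property the dual screen depends on.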

CEO Technical Information

The CEO database was designed with and currently runs on MySQL 5.0. The interface and database design were deliberately created as generic as possible to accommodate potential revisions to the evaluation tool while at the same time optimizing data retrieval. The database has a high degree of scalability to accommodate increasing student numbers and the addition of programs from our collaborative partners.
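One common way to keep a design generic is to store the outcomes as rows rather than as fixed columns, so that revising the evaluation tool changes data and not the schema. The sketch below illustrates that idea only; it is not the CEO schema. It uses Python's built-in `sqlite3` module as a stand-in for MySQL, and every table and column name is hypothetical.

```python
import sqlite3

# Hypothetical schema: outcomes live in their own table, so adding or
# rewording an outcome is an INSERT or UPDATE, not an ALTER TABLE.
SCHEMA = """
CREATE TABLE outcome (
    id    INTEGER PRIMARY KEY,
    label TEXT NOT NULL
);
CREATE TABLE evaluation (
    id          INTEGER PRIMARY KEY,
    student_id  TEXT NOT NULL,
    course      TEXT NOT NULL,
    author_role TEXT NOT NULL CHECK (author_role IN ('student', 'professor'))
);
CREATE TABLE entry (
    evaluation_id INTEGER NOT NULL REFERENCES evaluation(id),
    outcome_id    INTEGER NOT NULL REFERENCES outcome(id),
    example_text  TEXT NOT NULL DEFAULT '',
    satisfactory  INTEGER,  -- NULL until rated, then 0 or 1
    PRIMARY KEY (evaluation_id, outcome_id)
);
"""


def create_demo_db():
    """Create an in-memory database with the hypothetical schema."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    return conn


def add_outcome(conn, label):
    """Insert a new outcome row and return its id."""
    cur = conn.execute("INSERT INTO outcome (label) VALUES (?)", (label,))
    return cur.lastrowid
```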

The interface and web design for CEO are CSS 2.1 compliant and the dynamic web pages were coded with the scripting language PHP 5.0. CEO has a fixed-width design that fits a number of screen resolutions, including those of netbooks and tablet computers. CEO can be viewed in all modern browsers including Internet Explorer 7+, Firefox, Chrome, Opera and Safari.

Although the current site is offered in French and English with a bar to toggle between languages, it can easily be adapted to accommodate other languages.

Clinical evaluation data is stored on a secure server with limited, streamlined access, event logging and a daily backup service. In addition, the application is secured against major web attacks including cross-site scripting (XSS), cross-site request forgery (CSRF), and SQL injection. The CEO site requires minimal maintenance from the database manager, and several administrative tools scripted into the system allow complex or frequent tasks to be completed efficiently. Email support from the database administrator is provided to users having difficulty using the system. The most frequent questions were related to user login and creating new evaluations. These types of questions were expected from first-time CEO users or those who were new to online computing.
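Two standard defenses against the attacks named above are parameterized queries (against SQL injection) and escaping user text before it is placed in a page (against XSS). The sketch below shows both in Python for illustration; CEO itself is written in PHP and its actual safeguards are not described here. The `comments` table and the function names are hypothetical.

```python
import html
import sqlite3


def make_demo_db():
    """Create a tiny in-memory table of evaluation comments (hypothetical)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE comments (student_id TEXT, comment TEXT)")
    conn.execute("INSERT INTO comments VALUES (?, ?)", ("s001", "Safe practice."))
    return conn


def find_comments(conn, student_id):
    """Look up comments with a placeholder, so input is never parsed as SQL."""
    rows = conn.execute(
        "SELECT comment FROM comments WHERE student_id = ?", (student_id,)
    )
    return [row[0] for row in rows]


def render_comment(comment):
    """Escape user-entered text before embedding it in HTML."""
    return "<p>" + html.escape(comment) + "</p>"
```

With the placeholder in `find_comments`, an input such as `x' OR '1'='1` is treated as a literal student identifier and simply matches nothing.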

CEO pilot implementation and usability evaluation

A CEO demonstration site was developed to pilot test initial versions of the web-based system. Clinical professors and selected students navigated the demonstration site in the computer lab and provided feedback that informed successive iterations of the tool. The first user-group involved 24 students and 4 clinical professors. This small student cohort was part of a new program where students with a previous university degree were admitted into year two of the current program. The small student numbers provided an opportunity to pilot the system and track the strengths and challenges before implementing with classes of greater than 150 students.

The first cohort of 24 students and 4 clinical professors provided anecdotal feedback about CEO throughout the practicum. In addition, students completed the System Usability Scale (SUS) after completion of the practicum course. The SUS is a 10-item scale that provides a global view of subjective assessments of usability (Brooke, 1996). Questions relate to 3 components of usability: effectiveness, efficiency and satisfaction. Items are scored on a 5-point Likert scale with descriptors from ‘strongly disagree’ to ‘strongly agree’. Final scores for the SUS can range from 0 to 100, where higher scores indicate greater usability. The SUS has good face validity and high internal consistency (Cronbach’s alpha 0.91), and it correlates highly with longer usability scales (Bangor, Kortum, & Miller, 2008).
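For readers unfamiliar with how the ten item responses become a score out of 100, the standard SUS scoring rule (Brooke, 1996) can be sketched as follows. The function name `sus_score` is ours, and the example responses in the usage note are invented for illustration; they are not data from this study.

```python
def sus_score(responses):
    """Convert ten SUS item responses (each 1..5) into a 0..100 score.

    Odd-numbered items are positively worded and contribute (response - 1).
    Even-numbered items are negatively worded and contribute (5 - response).
    The summed contributions (0..40) are multiplied by 2.5.
    """
    if len(responses) != 10:
        raise ValueError("the SUS has exactly 10 items")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")
    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += response - 1
        else:
            total += 5 - response
    return total * 2.5
```

A respondent who answers 3 (neutral) on every item scores 50.0, and the cohort score reported in a study is the mean of the individual scores.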

Twenty-four students completed the SUS and the average usability score was 68/100. To help interpret SUS scores across different products or systems and across iterations of the same product, Bangor and colleagues (2008) created a one-question adjective scale that correlates with SUS scores to determine overall usability. The question reads, ‘Overall, I would rate the user friendliness of this product as:’ with the response options ‘worst imaginable’, ‘awful’, ‘poor’, ‘OK’, ‘good’, ‘excellent’, and ‘best imaginable’. A correlational analysis indicated that ratings on the adjective scale correlated highly (r = 0.82) with participants’ corresponding SUS scores (Bangor, Kortum, & Miller, 2009). A SUS mean score of 68 is associated with the descriptor ‘good’ on the adjective scale. Therefore, the authors were confident that students and clinical teachers found the CEO quite user friendly but that improvements could be made.

Based on anecdotal feedback from clinical professors, students, the software developer (VM), and the design team, substantial revisions were made to the pilot version of CEO to make it easier to navigate and document information, as well as more robust to user errors. The system is now used to evaluate our BScN students in all of the practicum courses. This involves over 1000 students and 130 teachers on 3 campuses practicing in a number of healthcare organizations.

Lessons learned and next steps

Many of our clinical professors have over 25 years of clinical experience and are expert clinicians and teachers but were not comfortable with the web-based aspect of the evaluation tool. In contrast, most of our students are quite comfortable with technology and have come to expect it to be used in all aspects of their clinical practice. As more faculty and students started using the system, it became clear that orientation sessions and just-in-time support for the clinical professors were key to successful adoption of CEO. To provide ongoing and adequate support to professors and students, a member of the design team (SL) was designated as the CEO ‘champion’ and liaison between the design team, students, clinical professors and clinical coordinators. The champion role included: developing a PowerPoint presentation to be used for orientation sessions and distributed as a reference; troubleshooting user problems throughout the semester; tracking potential system design changes based on user problems; and finally, developing a ‘frequently asked questions’ section to be posted on CEO and clinical practicum course websites.

Easy and reliable access to CEO from the university campus, the clinical agencies or students’ and professors’ homes was critical because students and clinical professors were instructed to use the site daily or at least weekly to document clinical progress. Initially, there were problems accessing CEO from off campus due to firewalls in place at area hospitals. Access to a computer was essential for the final evaluation meeting, and this was problematic for clinical professors who did not have their own laptop computer or did not have access to a computer in the clinical agency. The school purchased 5 laptop computers that can be borrowed from the secretary’s office as needed for the final evaluation meeting. This has helped ease the scheduling difficulties that come at the end of the semester, when most of the final evaluation meetings occur.

CEO database information may help in examining which clinical indicators are most frequently cited by clinical professors and students in a review of all the indicators. In addition, the CEO database could assist with curriculum evaluation specific to practicum courses.

Conclusion

Clinical evaluation in a web-based environment was phased into all practicum courses in our baccalaureate nursing program. The implementation process has been relatively problem free and the key to success has been just-in-time support to solve both user and system problems. Through an iterative process of feedback and revision we are updating the system so that it meets the needs of our students and clinical professors.

References

Arnold, E., & Boggs, K.U. (2003). Interpersonal relationships: Professional communication skills for nurses. (4th ed.). St Louis, MO: Saunders.

Bangor, A., Kortum, P. T., & Miller, J. T. (2008). An empirical evaluation of the System Usability Scale. International Journal of Human-Computer Interaction, 24(6), 574-594.

Bangor, A., Kortum, P. T., & Miller, J. T. (2009). Determining what individual SUS scores mean: Adding an adjective rating scale. Journal of Usability Studies, 4(3), 114-123.

Brooke, J. (1996). SUS: A “quick and dirty” usability scale. In P. W. Jordan, B. Thomas, B. A. Weerdmeester, & I. L. McClelland (Eds.), Usability evaluation in industry (pp. 189-194). London: Taylor & Francis.

College of Nurses of Ontario. (2005; 2009; 2010). Entry to practice competencies for Ontario Registered Nurses. Toronto: College of Nurses of Ontario.

Drucker, P. (1983). Post-capitalist Society. New York: Harper Business Publishers.

Fothergill Bourbonnais, F., Langford, S., & Giannantonio, L. (2008). Development of a clinical evaluation tool for baccalaureate nursing students. Nurse Education in Practice, 8, 62-71.

National Council for Excellence in Critical Thinking Instruction. (1992). Critical Thinking: Shaping the Mind of the 21st Century. Rohnert Park, California: Sonoma State University.

Oermann, M. H., Yarbrough, S. S., Saewert, K. J., Ard, N., & Charasika, M. (2009). Clinical evaluation and grading practices in schools of nursing: National survey findings part II. Nursing Education Perspectives, 30(6), 352-357.

Patterson, C., Crooks, J., & Lunyk-Child, O. (2002). A new perspective on competencies for self-directed learning. Journal of Nursing Education, 41(1), 25-31.

Scriven, M., & Paul, R. (2006). National Council for Excellence in Critical Thinking. Retrieved from: http://www.criticalthinking.org/about/nationalCouncil.cfm

Author biographical statements

Dr. Jacqueline Ellis

is an Associate Professor at the School of Nursing, University of Ottawa and a Research Mentor for Nursing at the Children’s Hospital of Eastern Ontario. Dr. Ellis developed the prototype for Clinical Evaluation Online (CEO) and is currently the Chair of the taskforce that is responsible for overseeing its implementation.

Contact author: Jacqueline Ellis

Phone: 613 562 5800 x 8440

Fax: 613 562 5443

Email: jellis@uottawa.ca

Ms. Stephanie Langford

is a Teaching Associate and Chair of the Clinical Evaluation Taskforce for the baccalaureate program at the School of Nursing, University of Ottawa. She is a co-developer of the clinical evaluation tool. Since the implementation of CEO, Ms Langford has provided ongoing informational and technical support to students, clinical teachers and clinical coordinators.

Mr. Joseph Mathews

is currently a third-year student in the School of Information Technology and Engineering at the University of Ottawa. Mr. Mathews is responsible for the ongoing development of the CEO database, code and interface.

Dr. Frances Fothergill-Bourbonnais

is a Professor at the School of Nursing, University of Ottawa. Dr. Fothergill-Bourbonnais has acted as a clinical coordinator for almost 30 years and was instrumental in the development of the content of the clinical evaluation tool and its uptake by students and clinical professors.

Ms. Diane Alain

is a member of the Clinical Evaluation Taskforce for the baccalaureate program at the School of Nursing, University of Ottawa representing La Cité collégiale. Since the implementation of CEO, Ms Alain has provided ongoing informational and technical support to students, clinical teachers and clinical coordinators in French.

Dr. Betty Cragg

is a Professor and Interim Director of the School of Nursing, University of Ottawa and was Assistant Director for Undergraduate programs as CEO was being developed, piloted and implemented.

EDITOR

June Kaminski

CITATION

Ellis, J., Langford, S., Mathews, J., Fothergill Bourbonnais, F., Alain, D., & Cragg, B. (Winter, 2011). Clinical Evaluation online: Implementation of a clinical evaluation tool into a web-based format for baccalaureate nursing students. CJNI: Canadian Journal of Nursing Informatics, 6 (1), Article One. https://cjni.net/journal/?p=1166