Theory applied to informatics – Usability

by June Kaminski, RN MSN PhD (c)

CJNI Editor in Chief

This feature is the newest entry of our column – Theory in Nursing Informatics written by our Editor in Chief, June Kaminski. Theory is an important aspect of nursing informatics – one that is often neglected due to time and context. In each column, a relevant theory will be presented and applied to various aspects of informatics in nursing practice, education, research and/or leadership.

Citation: Kaminski, J. (2020) Theory applied to informatics – Usability. Canadian Journal of Nursing Informatics, 15(4). https://cjni.net/journal/?p=8442

Usability is an important consideration when assessing the utility and user-friendliness of any piece of equipment, including technology in the workplace or home, and it is a key consideration when using technology and equipment in health care. A standard definition of usability comes from the International Organization for Standardization (ISO) in Standard 9241-11: “Usability: the extent to which a product can be used by specified users to achieve specified goals with effectiveness, efficiency and satisfaction in a specified context of use” (2018, Section 3.1).

ISO Standard 9241-11 also states:

“The word “usability” is also used as a qualifier to refer to the design knowledge, competencies, activities and design attributes that contribute to usability, such as usability expertise, usability professional, usability engineering, usability method, usability evaluation, usability heuristic” (2018, Section 3.1).

According to Jakob Nielsen, “Usability is a quality attribute that assesses how easy user interfaces are to use. The word ‘usability’ also refers to methods for improving ease-of-use during the design process” (2012, p. 1).

Usability Components

Usability is defined by five quality components:

Learnability: How easy is it for users to accomplish basic tasks the first time they encounter the design? “Learnability is how quickly a new or novice user learns or relearns the user interface to conduct basic tasks. Learnability is dependent on the consistency of the interface and the ability of the interface to allow exploratory learning by including undo or cancel functions. It can be measured by the time it takes to learn a new task” (Johnson et al., 2011, p. 9).

Efficiency: Once users have learned the design, how quickly can they perform tasks? “Efficiency is defined as the speed with which a user can complete a task or accomplish a goal. It is typically measured by the length of time required to complete a task, task success, number of keystrokes, and number of screens visited” (Johnson et al., 2011, p. 9).

Memorability: When users return to the design after a period of not using it, how easily can they reestablish proficiency? Once a user has taken the time to learn how to navigate a system and find what they are looking for, they need to be able to remember how to do it when they come back. A system needs to have high memorability. Memorability is a measure of how easy a system is to remember after a substantial time lapse between visits. Memorability can be determined through system analytics.

Errors: How many errors do users make, how severe are these errors, and how easily can they recover from the errors? “Error tolerance refers to the ability of the system to help users avoid and recover from error. Examples of error measurement include frequency of errors and recovery rate of errors” (Johnson et al., 2011, p. 9).

Satisfaction: How pleasant is it to use the design? “Satisfaction consists of a set of subjective measures regarding a user’s perception of a system’s usefulness and impact and how likable a system is. The main measures include instruments and interviews that may measure the users’ perception of a system” (Johnson et al., 2011, p. 9).
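Several of the measures quoted above (time on task, task success, keystrokes, screens visited, error frequency and recovery rate) are simple to compute once test sessions have been logged. The following is a minimal sketch in Python, not a standard instrument: the `TaskAttempt` record, its field names and the sample numbers are all hypothetical and only illustrate how such measures might be aggregated.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskAttempt:
    """One participant's attempt at one task (all fields are illustrative)."""
    seconds: float         # time taken to finish or abandon the task
    completed: bool        # task success
    keystrokes: int        # number of keystrokes used
    screens_visited: int   # number of screens visited
    errors: int            # errors made during the attempt
    errors_recovered: int  # errors the user recovered from


def summarize(attempts: list[TaskAttempt]) -> dict[str, float]:
    """Aggregate efficiency and error measures over a list of attempts."""
    total_errors = sum(a.errors for a in attempts)
    recovered = sum(a.errors_recovered for a in attempts)
    return {
        "mean_seconds": mean(a.seconds for a in attempts),
        "success_rate": sum(a.completed for a in attempts) / len(attempts),
        "mean_keystrokes": mean(a.keystrokes for a in attempts),
        "mean_screens": mean(a.screens_visited for a in attempts),
        "errors_per_attempt": total_errors / len(attempts),
        # Recovery rate is undefined when no errors occurred; report 1.0.
        "recovery_rate": recovered / total_errors if total_errors else 1.0,
    }


# Made-up data for three participants attempting the same task.
sample = [
    TaskAttempt(90, True, 40, 3, 1, 1),
    TaskAttempt(150, False, 75, 6, 3, 1),
    TaskAttempt(60, True, 30, 3, 0, 0),
]
summary = summarize(sample)
```

Learnability and memorability could be approximated with the same record by comparing a participant's first attempt with later attempts, or with an attempt made after a break from the system.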

Critical for Design

Usability must be considered when designing or introducing any technological device, whether it is a wearable, a mobile phone, a laptop, a tablet, a computer-based application or a full-fledged organizational system.

It is important to consider all aspects of usability when designing systems for health care. “Missing critical functionalities, poor reliability of the software, or inadequate match between interface features and user tasks in general will have a strong impact on users’ ability to conduct their work, independently from the usability of the available system features” (Johnson et al., 2011, pp. 8-9).

If we want nurses and other health care professionals to use a system well, usability is critical. If a system is difficult to learn, users are going to avoid it or find workarounds to try to use it quickly and efficiently. If we want patients to use a system, usability is key.

If a system is usable it promotes:

- Engagement

- Productivity

- Efficiency

- Aesthetics and Pleasure in Use

Informatics experts must work with teams to ensure all nursing components meet usability standards.

Nursing informatics encompasses working with all these varied interfaces to promote positive and health-supporting person-centred experiences.

Common Usability Problems that must be addressed

Common usability issues in health care systems such as electronic health care record systems (EHRs) include the following four considerations:

Poor organization and display of information – “To perform tasks efficiently and correctly, clinician EHR users need clear, concise, and easily accessible information, and well-integrated system functions. The limited screen space and poor interface design of some systems add to clinician’s cognitive workload and increase the risk of errors” (Johnson et al., 2011, p. 14).

Interference with practice workflow – “Research indicates the importance of EHRs matching the workflow of its users, making information easy to find and allowing for quick navigation within and across functions. Yet a chief complaint about EHR systems is that they do not integrate well with user workflows; health care providers often cite the need to change their workflow as one of the main reasons for not adopting an EHR. Unless there is compatibility between the work structure imposed by the system and routines that clinicians typically follow, there is likely to be resistance to the technology. Further, the lack of integration between systems may inhibit optimal workflows” (Johnson et al., 2011, p. 14).

Increases in cognitive burden – Clinicians are typically conducting tasks under significant time pressure and in settings that include multiple demands for their attention. Clinicians must also process a massive amount of information while remaining accurate and efficient. When combined, time pressures, conflicting demands, and information burden can lead to cognitive overload.

“The problem of cognitive burden also extends to problems with naming conventions. A lack of standard clinical nomenclatures, or poor implementation of these nomenclatures within EHRs, makes information search and retrieval difficult across systems” (Johnson et al., 2011, pp. 14-15).

Poor design of system functions – “Poor documentation capability at the point of care affects the quality of information to support health care decisions as well as other key activities including compliance, external reporting and billing. Management of test results is another area of concern. Errors in managing test results can create significant harm to patients. Also, well-designed ordering capabilities are important. Certain features, such as auto-fill of medications, are more likely to improve usability by increasing efficiency and thereby increasing provider use of the system” (Johnson et al., 2011, p. 15).

Usability Evaluation

There are a variety of ways to evaluate the usability of a health care system. Two of the most common are:

Usability Testing (done with users)

Heuristic Evaluation (done with experts)

Usability Testing

This form of usability evaluation is done by recruiting actual users who will use the system (nurses, doctors, etc.) to thoroughly test the system’s functionality and interface.

“Typically, during a test, participants will try to complete typical tasks while observers watch, listen and takes notes. The goal is to identify any usability problems, collect qualitative and quantitative data and determine the participant’s satisfaction with the product” (U.S. Department of Health and Human Services, n.d.).

During a usability test, the design team can:

- Learn if participants are able to complete specified tasks successfully

- Identify how long it takes to complete specified tasks

- Find out how satisfied participants are with the system

- Identify changes required to improve user performance and satisfaction

- Analyze the performance to see if it meets the usability objectives

(U.S. Department of Health and Human Services, n.d.)
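The last point in the list, analyzing performance against usability objectives, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the threshold values in `OBJECTIVES`, the 1-5 satisfaction scale, the `evaluate_task` helper and the participant results are all hypothetical, and a real design team would set its own targets.

```python
from statistics import mean

# Hypothetical usability objectives; real targets are set by the design team.
OBJECTIVES = {
    "min_completion_rate": 0.80,   # share of participants who finish the task
    "max_mean_seconds": 120.0,     # mean time on task
    "min_mean_satisfaction": 4.0,  # mean rating on an assumed 1-5 scale
}


def evaluate_task(results: list[dict]) -> dict[str, bool]:
    """Compare one task's test results with the objectives above.

    Each result is a dict with the keys 'completed' (bool),
    'seconds' (float) and 'satisfaction' (int, 1-5).
    """
    completion_rate = sum(r["completed"] for r in results) / len(results)
    mean_seconds = mean(r["seconds"] for r in results)
    mean_satisfaction = mean(r["satisfaction"] for r in results)
    return {
        "completion_ok": completion_rate >= OBJECTIVES["min_completion_rate"],
        "time_ok": mean_seconds <= OBJECTIVES["max_mean_seconds"],
        "satisfaction_ok": mean_satisfaction >= OBJECTIVES["min_mean_satisfaction"],
    }


# Made-up results for five participants on a single task.
results = [
    {"completed": True, "seconds": 95.0, "satisfaction": 4},
    {"completed": True, "seconds": 110.0, "satisfaction": 5},
    {"completed": False, "seconds": 180.0, "satisfaction": 2},
    {"completed": True, "seconds": 100.0, "satisfaction": 4},
    {"completed": True, "seconds": 90.0, "satisfaction": 4},
]
report = evaluate_task(results)
```

With these sample numbers the task meets the completion and time objectives but falls short on satisfaction, which is the kind of result that would point the team toward changes aimed at user satisfaction rather than speed.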

There are both benefits and challenges in usability testing (Table 1).

Table 1: Usability Testing Benefits & Challenges

Moderators can collect data from users through four techniques (Figure 1):

Figure 1: Usability Testing Moderation Techniques

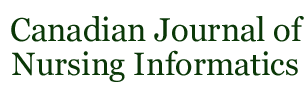

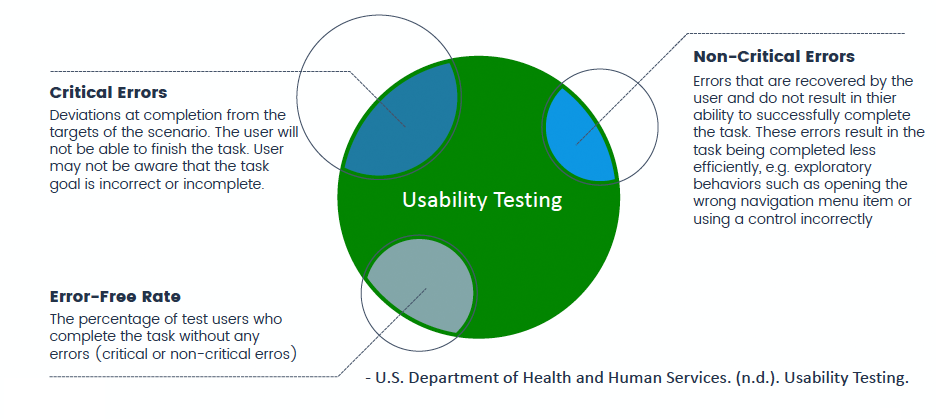

Testing metrics can be used to examine common error-related, task-related and subjective usability factors (Figures 2, 3 and 4).

Figure 2: Common Error Related Usability Testing Metrics

Figure 3: Common Task Related Usability Testing Metrics

Figure 4: Common Subjective Related Usability Testing Metrics

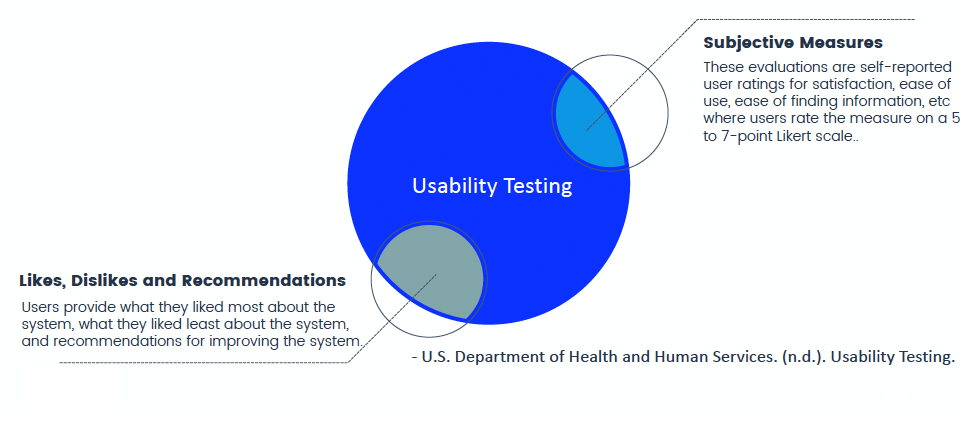

Heuristic Evaluation

Heuristic evaluation can uncover both major and minor problems not necessarily found with user testing. A small group of usability experts can identify more than 50 percent of the usability problems with a system interface based on their knowledge of human cognition and interface design rules of thumb or heuristics such as Jakob Nielsen’s usability heuristics for evaluating user interfaces.

These heuristics provide a quick and easy way of evaluating the usability of system interfaces against a set of universal design principles.
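The idea that a handful of experts can catch most problems can be illustrated with a simple probability model. The sketch below is an assumption for illustration only, not a result from this article: it supposes that each evaluator independently finds any given problem with the same probability, and the default `detection_rate` of 0.3 is a hypothetical value.

```python
def expected_share_found(evaluators: int, detection_rate: float = 0.3) -> float:
    """Expected share of problems found by at least one evaluator.

    Assumes each evaluator independently finds any given problem with
    probability `detection_rate` (a hypothetical value, not from the article).
    """
    return 1 - (1 - detection_rate) ** evaluators


# Expected coverage for one, three and five evaluators under this assumption.
shares = {n: round(expected_share_found(n), 2) for n in (1, 3, 5)}
```

Under these assumptions a single evaluator finds about 30 percent of the problems, three find about 66 percent and five about 83 percent. Each additional expert adds less than the one before, which is one reason small evaluation teams are common.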

Nielsen’s Heuristic Usability Principles

1. FEEDBACK: Visibility of system status

2. METAPHOR: Match between system and the real world

3. NAVIGATION: User control and freedom

4. CONSISTENCY: Consistency and standards

5. PREVENTION: Error prevention

6. MEMORY: Recognition rather than recall

7. EFFICIENCY: Flexibility and efficiency of use

8. DESIGN: Aesthetic and minimalist design

9. RECOVERY: Help users recognize, diagnose, and recover from errors

10. HELP: Help and documentation

Basic tools needed to conduct a heuristic evaluation:

- 3-5 experts

- a set of heuristics

- a list of user tasks

- a system to test

- a standard form for recording assessment notes and recommendations

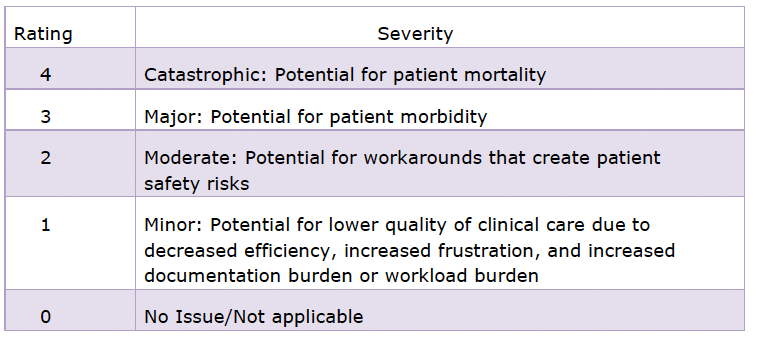

During a heuristic evaluation, the experts review the system and rate any deficiencies according to an established rating scale (Table 2).

Table 2: Sample Heuristic Evaluation Rating Scale

The experts then summarize their review of the system, with results similar to those shown below (Table 3). Results for all aspects of the system are tallied and a final report is written with a summary and recommendations for improvement.

Table 3: Heuristic Evaluation Rating Example

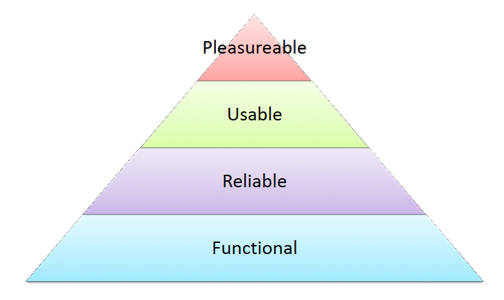
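The tallying step described above can be sketched in Python. Everything in this example is hypothetical: the findings are invented, the 0-4 severity scale is an assumption that may differ from the scale in Table 2, and `tally` is an illustrative helper rather than part of any established tool. The heuristic labels are taken from the list of Nielsen's principles given earlier.

```python
from collections import defaultdict
from statistics import mean

# Each finding: (evaluator, heuristic, description, severity).
# Severity uses an assumed 0-4 scale (0 = no problem, 4 = most severe);
# substitute the scale the evaluation team has actually agreed on.
findings = [
    ("Expert A", "FEEDBACK", "No confirmation after saving a note", 3),
    ("Expert B", "FEEDBACK", "No confirmation after saving a note", 2),
    ("Expert A", "PREVENTION", "Dose field accepts negative values", 4),
    ("Expert C", "PREVENTION", "Dose field accepts negative values", 4),
    ("Expert B", "CONSISTENCY", "Two different icons for printing", 1),
]


def tally(findings):
    """Group severity ratings by heuristic and summarize them."""
    by_heuristic = defaultdict(list)
    for _evaluator, heuristic, _description, severity in findings:
        by_heuristic[heuristic].append(severity)
    summary = {
        h: {"ratings": len(s), "mean_severity": mean(s), "max_severity": max(s)}
        for h, s in by_heuristic.items()
    }
    # Most severe heuristics first, so the report leads with urgent fixes.
    return dict(sorted(summary.items(),
                       key=lambda item: item[1]["max_severity"],
                       reverse=True))


report = tally(findings)
```

Sorting by the highest severity recorded for each heuristic is one possible design choice. A team could equally rank by mean severity or by the number of experts who reported the same problem.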

Aarron Walter (2011) theorized that superior needs (such as pleasure and delight) can only be achieved after more foundational ones (such as functionality, reliability and usability) are fulfilled (Figure 5).

Figure 5: Hierarchy of User Needs

Nursing informatics experts must be involved in the design of nursing systems to ensure that nurses and patients benefit not only from usability but also from the delight and pleasure of using well-crafted, well-thought-out systems that support optimal health care.

References

Franklin, A., Walji, M. & Zhang, J. (2017). Expert Review, Heuristic Evaluation: Methods. University of Texas Health Science Center at Houston. [Video file]. https://youtu.be/IIT5MaVzt_Y

ISO. (2018). ISO 9241-11:2018 Guidance on Usability. In Ergonomics of human-system interaction — Part 11: Usability: Definitions and concepts. https://www.iso.org/obp/ui/#iso:std:iso:9241:-11:ed-2:v1:en

Johnson, C.M., Johnston, D., Crowley, P.K., Culbertson, H., Rippen, H., Damico, D. & Plaisant, C. (2011). EHR Usability Toolkit: A Background Report on Usability and Electronic Health Records (Prepared by Westat under Contract No. 290-09-00023I-7). AHRQ Publication No. 11-0084-EF. Rockville, MD: Agency for Healthcare Research and Quality.

Nielsen, J. (2012). Usability 101: Introduction to Usability. Nielsen Norman Group. https://www.nngroup.com/articles/usability-101-introduction-to-usability/

Nielsen, J. (1995). 10 Usability Heuristics for User Interface Design. Nielsen Norman Group. https://www.nngroup.com/articles/ten-usability-heuristics/

U.S. Department of Health and Human Services. (n.d.). Usability Evaluation Basics. Usability.gov. https://www.usability.gov/what-and-why/usability-evaluation.html

Walter, A. (2011). Designing for Emotion. A Book Apart, LLC.